This article explores why most enterprises struggle to deploy AI profitably, how risks are escalating across legal, operational, and organizational dimensions, and why responsible AI governance and independent audit are now essential for sustainable competitive advantage.

AI is moving faster than most organizations can absorb, and the gap between ambition and reality is widening at an uncomfortable rate. Investment is exploding, expectations are sky-high, and yet most enterprises still aren’t seeing real ROI. At the same time, risk—operational, legal, ethical, and financial—is quietly compounding beneath the surface. If your AI initiatives aren’t supported by strong governance and disciplined oversight, the question isn’t whether something breaks. It’s when. And what it will cost you.

AI is already improving lives, strengthening industries, and reshaping how organizations operate. You probably see the promise every day. But as with any rapidly evolving technology, leaders tend to fall into two camps: the “all-in” adopters pushing hard to get ahead, and the skeptics who see only vulnerabilities and potential existential threats. Neither extreme helps an enterprise navigate AI responsibly.

The truth is more practical. AI isn’t an existential risk to humanity—but it is an existential risk to your business if you adopt it faster than you can govern it. And with the market accelerating, governance is quickly becoming the differentiator between those who scale AI effectively and those who stall out under the weight of technical debt, compliance gaps, and reputational harm.

Consider the current landscape: U.S. private AI investment grew to $109.1 billion in 2024, according to the Stanford 2025 AI Index Report. Innovation is happening everywhere—agentic systems, medical AI devices, multimodal reasoning, and enterprise automation. AI capabilities are advancing faster than ever, radically shortening the distance between an idea and a working prototype. And organizations are responding: global enterprises are allocating more resources, approving more pilots, and pushing for AI acceleration across their operations.

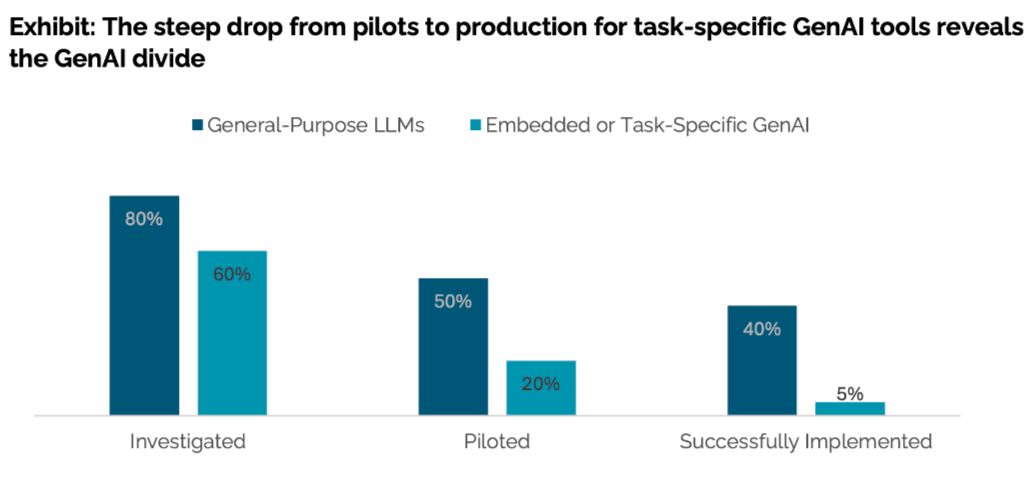

Yet the business outcomes are telling a different story. Most organizations report limited financial impact from GenAI adoption. McKinsey’s State of AI 2025 found that more than 80% of leaders still see no enterprise-level EBIT contribution from AI. MIT’s NANDA research reached an equally sobering conclusion: 95% of organizations are getting zero measurable GenAI return, with only 5% of pilots ever reaching successful production.

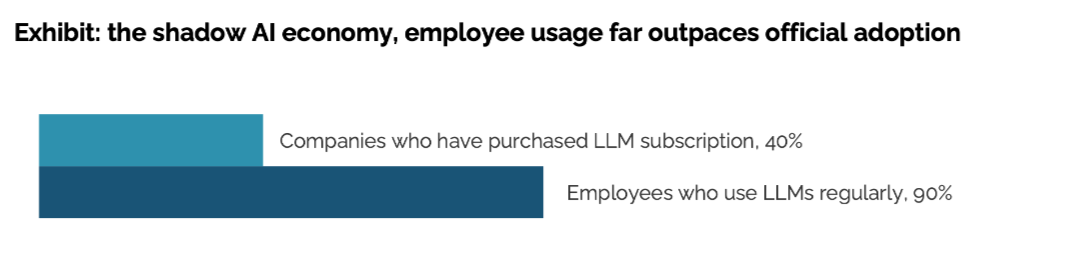

In many cases, employees are racing ahead with unsanctioned tools—a growing shadow AI ecosystem—while official initiatives remain trapped in silos. The disconnect is stark. And in regulated industries such as financial services, healthcare, and life sciences, that misalignment isn’t just inefficient. It’s costly and potentially dangerous.

Writer’s 2025 Enterprise AI Survey highlights the friction clearly:

Risk is scaling alongside innovation. As AI becomes more autonomous—often described as agentic AI—and more deeply embedded in workflows, its potential to impact human agency, safety, and well-being grows. But the organizational response remains inconsistent. The “all-in” group underweights risk because of competitive pressure; the “doomsday” group overweights risk and slows progress to a crawl. Neither approach works in a world where compliance requirements shift monthly and case law is being shaped in real time.

The consequences of poorly governed systems are already visible. CIO’s analysis of AI disasters catalogs a string of high-profile failures, and the legal exposure is real: in the Air Canada chatbot ruling, the airline was held liable for inaccurate and misleading guidance its chatbot gave a customer. Regulators have also begun cracking down on AI misrepresentation. The U.S. Securities and Exchange Commission issued the first federal AI-washing fines against investment advisers for misleading claims about their use of AI. Litigation tied to AI errors is accelerating as well, as reflected in the George Washington University AI Litigation Database.

In high-risk domains such as healthcare diagnostics, credit scoring, clinical decision support, and fraud detection, the consequences of unmanaged model behavior can escalate rapidly. And as production data shifts away from the data a model was trained and validated on, unmonitored drift quietly raises error rates until a failure becomes unavoidable.
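One common way to catch drift before it becomes a failure is to compare the distribution of a model input (or output score) in production against a baseline captured at validation time. The sketch below uses the Population Stability Index, PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the baseline and current proportions in bin i. PSI is a widely used drift metric in credit scoring, but it is our illustrative choice; the article does not prescribe a method. The function names, the ten equal-width bins, and the 0.1 / 0.25 cutoffs are assumptions, with the cutoffs being commonly cited rules of thumb rather than regulatory thresholds.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare a feature's current distribution with its validation-time baseline.

    Bin edges come from the baseline's range. Proportions are floored at `eps`
    so that empty bins do not cause log(0) or division by zero.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        total = len(values)
        return [max(c / total, eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(current)
    # Each term is non-negative, so PSI grows as the distributions diverge.
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_status(psi: float) -> str:
    """Map a PSI value to an action (illustrative rule-of-thumb cutoffs)."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift: investigate"
    return "significant drift: escalate"


if __name__ == "__main__":
    baseline = [i / 100 for i in range(1000)]   # hypothetical validation data
    shifted = [x + 3.0 for x in baseline]       # simulated production shift
    for name, data in (("unchanged", baseline), ("shifted", shifted)):
        psi = population_stability_index(baseline, data)
        print(name, round(psi, 3), drift_status(psi))
```

In practice a check like this would run on a schedule for each monitored feature, with an "escalate" result routed to whoever owns the model under the governance policy.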

AI governance is the foundation for responsible and effective AI adoption. It establishes the policies, controls, processes, and accountability mechanisms that ensure AI systems operate reliably and in alignment with business objectives. Governance must come first because it defines how an organization evaluates risk, measures performance, identifies failure modes, and determines when a system is ready for real-world use.
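One way to make the "ready for real-world use" decision concrete is to write governance criteria down as explicit, testable thresholds that a model must meet before release. The following is a minimal sketch of such a release gate. The `Threshold` class, the `readiness_gate` function, the metric names, and the limits are all hypothetical; a real program would derive them from its own risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """A hypothetical governance requirement for one evaluation metric."""
    metric: str
    limit: float
    higher_is_better: bool = True


def readiness_gate(
    results: dict[str, float], policy: list[Threshold]
) -> tuple[bool, list[str]]:
    """Return (approved, reasons). A metric that was never measured fails the gate."""
    failures: list[str] = []
    for rule in policy:
        value = results.get(rule.metric)
        if value is None:
            failures.append(f"{rule.metric}: not evaluated")
            continue
        ok = value >= rule.limit if rule.higher_is_better else value <= rule.limit
        if not ok:
            op = ">=" if rule.higher_is_better else "<="
            failures.append(f"{rule.metric}: {value} (requires {op} {rule.limit})")
    return (not failures, failures)


if __name__ == "__main__":
    # Hypothetical policy and evaluation results.
    policy = [
        Threshold("accuracy", 0.90),
        Threshold("hallucination_rate", 0.02, higher_is_better=False),
        Threshold("fairness_gap", 0.05, higher_is_better=False),
    ]
    approved, reasons = readiness_gate(
        {"accuracy": 0.93, "hallucination_rate": 0.04}, policy
    )
    print(approved, reasons)
```

Treating an unmeasured metric as a failure is a deliberate design choice: it keeps a skipped evaluation from silently passing the gate.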

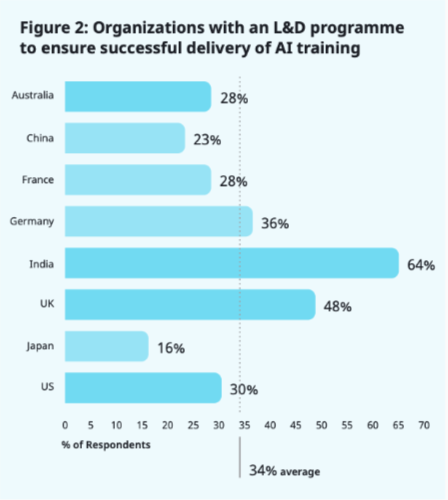

A mature governance program spans people, processes, and technology. As organizations operationalize AI, it becomes clear that the “people” dimension is often the least mature. Employees are responsible for interpreting outputs, monitoring model behavior, escalating issues, and making judgment calls when AI interacts with customers or critical workflows. Governance doesn’t wait for this readiness to exist; it exposes the readiness gaps that must be closed for AI to scale safely.

BSI’s findings illustrate one of these gaps: learning and development programs have not kept pace with AI adoption. Many teams lack the training needed to meet the responsibilities that governance frameworks assign to them. Training is not a substitute for governance, nor does it come first. Rather, this gap shows how governance reveals organizational weaknesses and guides the investments required to achieve AI maturity.

Research consistently shows the payoff. Strong responsible AI practices improve business efficiency, reduce operating costs, and increase customer trust. Early detection of issues—hallucinations, drift, misalignment—can turn an expensive remediation crisis into a manageable correction cycle.

Governance closes the gap between risk and execution. Auditing verifies it.

An external auditor brings neutrality, structure, and expertise that internal teams often can’t replicate. Independent AI auditing evaluates your entire AI lifecycle:

It uncovers blind spots, verifies compliance with regulatory requirements, strengthens accountability, and confirms that your systems perform reliably under real-world conditions. More importantly, it helps your teams move faster with confidence by removing ambiguity and catching issues early.

This is increasingly critical as legal expectations evolve. Developers and enterprises face growing exposure to tort liability, as highlighted in RAND’s U.S. Tort Liability for Large-Scale AI Damages. Courts are still determining how to treat AI-driven harm, but the trend is clear: organizations will be held responsible for what their systems do.

If you’re questioning your organization’s exposure, the maturity of your governance program, or the reliability of your AI initiatives, the next step is clear.

AuditDog.AI can help you build and validate the structures required to deploy AI responsibly, safely, and profitably—protecting your investments and positioning you to move faster with confidence.